8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Last updated 16 June 2024

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations
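As a rough illustration of the tensor sharding topic listed above, the following is a minimal sketch, not the chapter's own code. It assumes a recent JAX release and uses the `jax.sharding` API (`Mesh`, `NamedSharding`, `PartitionSpec`) with `jax.jit`, rather than the `xmap()` or `pjit()` entry points the chapter names. The mesh axis name `"data"`, the array shapes, and the `forward` function are hypothetical choices made for the example.

```python
import jax
import jax.numpy as jnp
import numpy as np
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over whatever devices are available
# (a single CPU device also works; the sharding is then trivial).
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))  # "data" is an assumed axis name

# Make the leading dimension divisible by the number of devices.
n = devices.size
x = jnp.arange(4 * n * 3, dtype=jnp.float32).reshape(4 * n, 3)
w = jnp.ones((3, 2), dtype=jnp.float32)

# Split the rows of x across the "data" axis; replicate w on every device.
x_sharded = jax.device_put(x, NamedSharding(mesh, P("data", None)))
w_replicated = jax.device_put(w, NamedSharding(mesh, P()))

@jax.jit
def forward(x, w):
    # XLA partitions this computation according to the input shardings.
    return jnp.tanh(x @ w)

y = forward(x_sharded, w_replicated)
print(y.shape, y.sharding)
```

On a multi-device machine, each device holds only its slice of `x` and computes the matching slice of `y`. On a single device the same code runs unchanged, which makes it convenient for checking the logic before scaling up.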

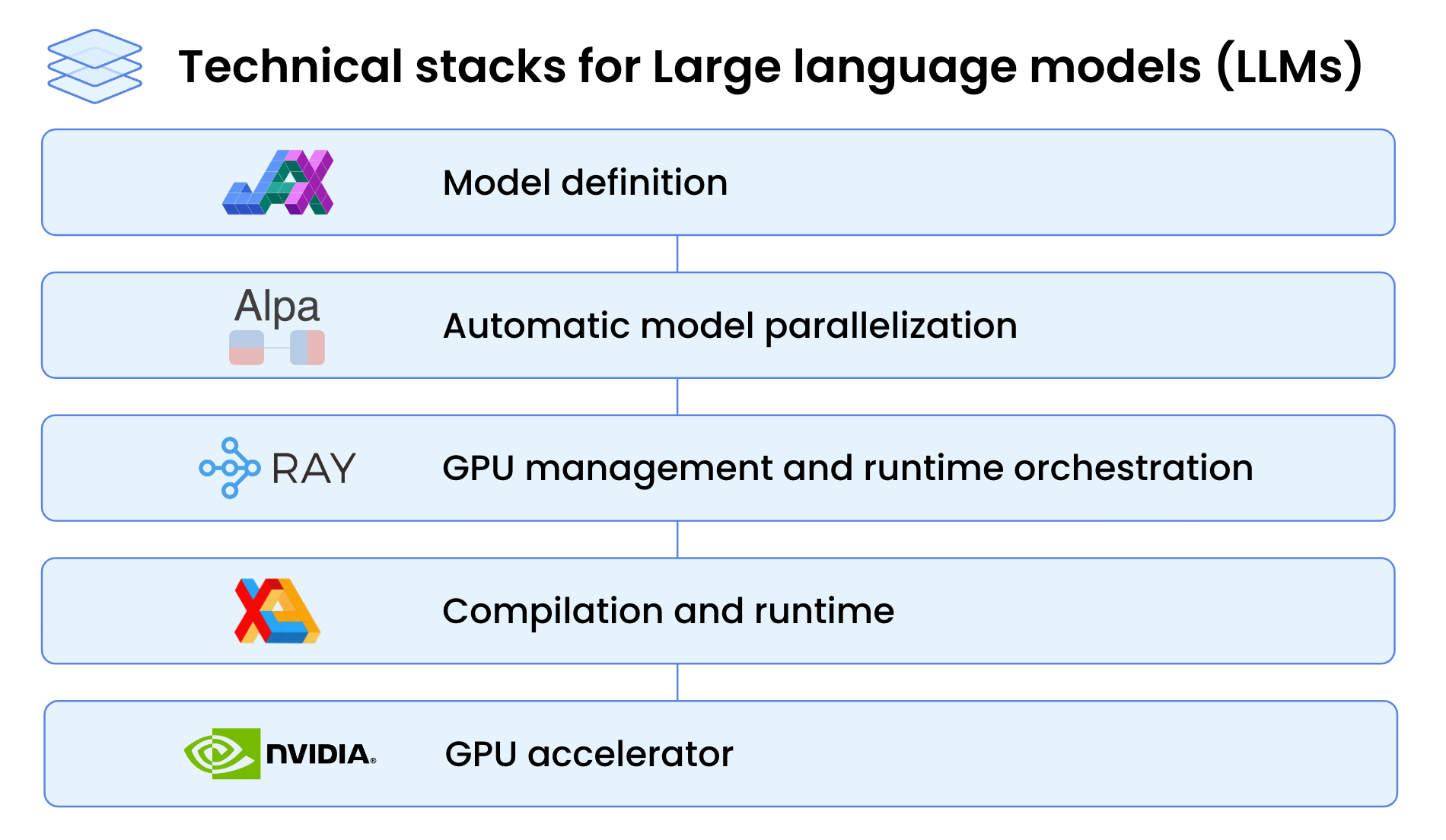

High-Performance LLM Training at 1000 GPU Scale With Alpa & Ray

Efficiently Scale LLM Training Across a Large GPU Cluster with

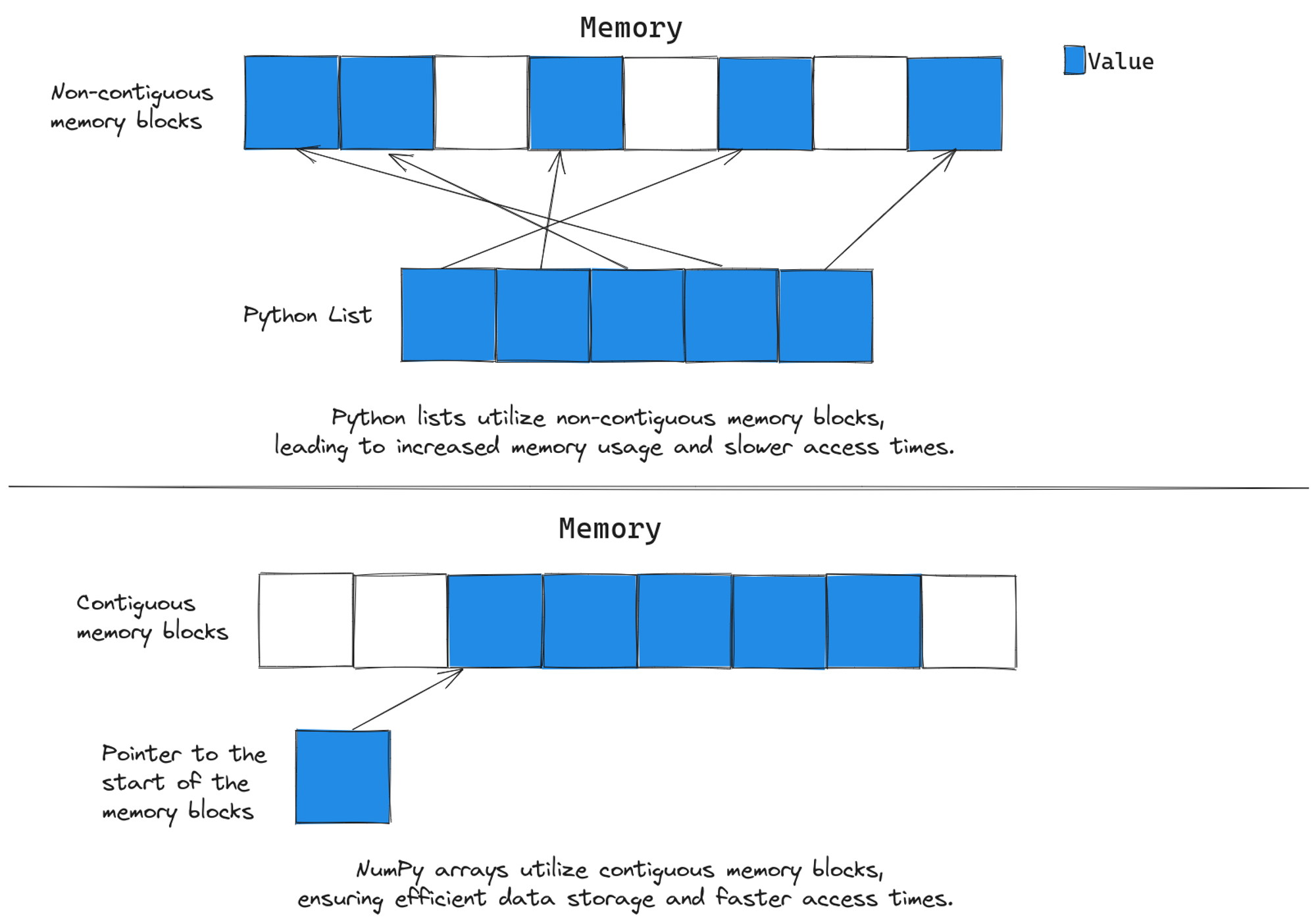

Breaking Up with NumPy: Why JAX is Your New Favorite Tool

Running a deep learning workload with JAX on multinode multi-GPU

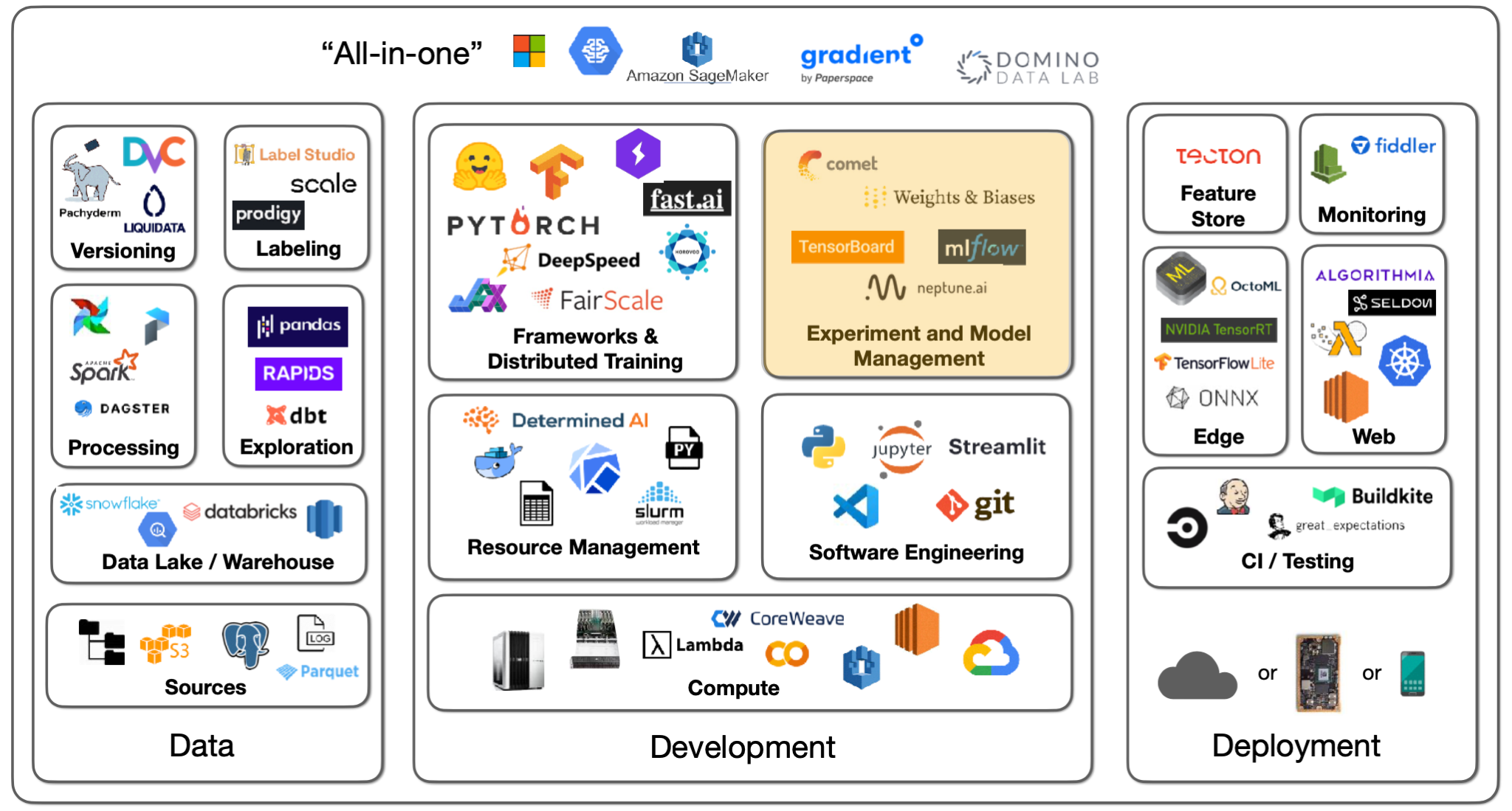

Lecture 2: Development Infrastructure & Tooling - The Full Stack

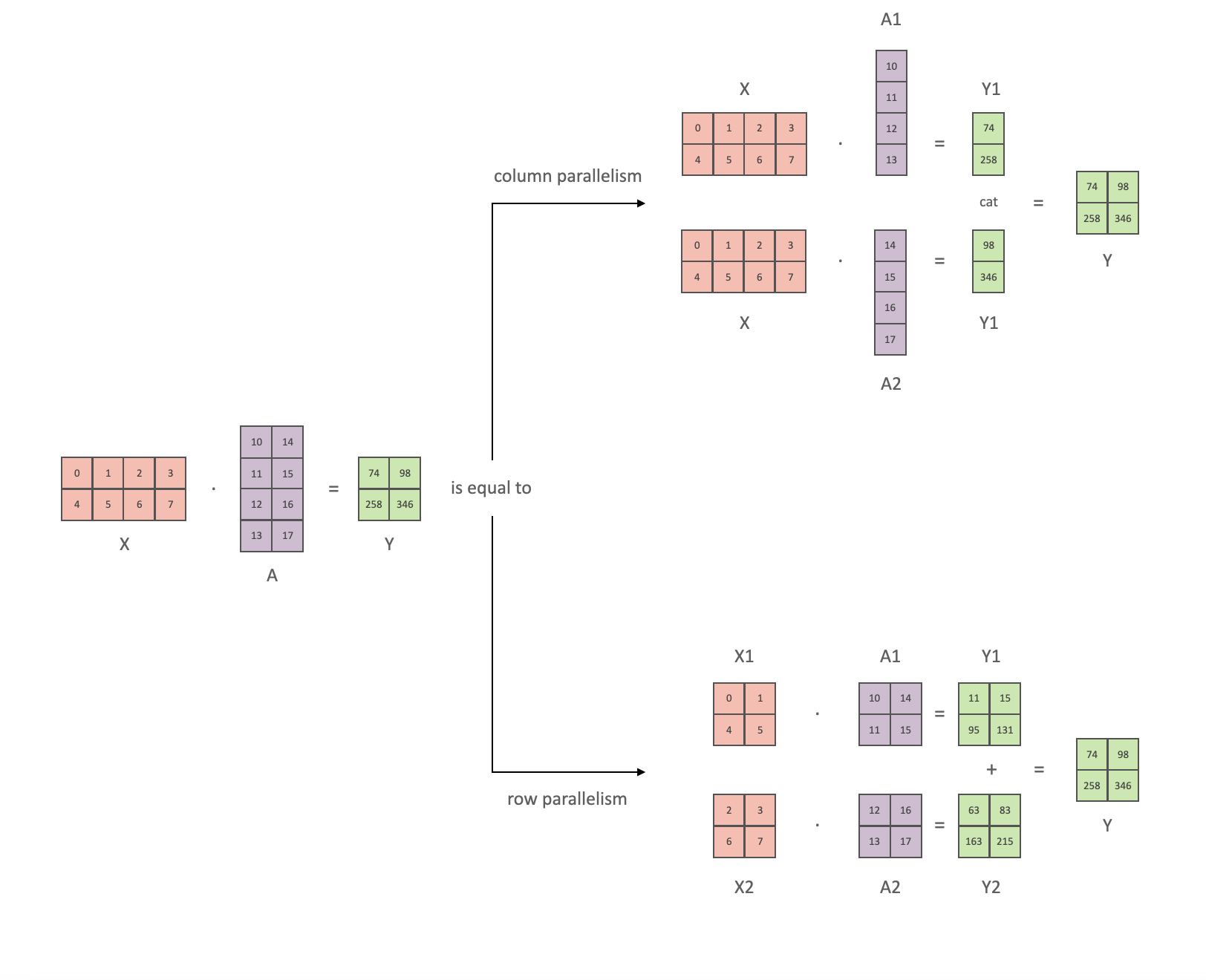

Model Parallelism

7 Parallelizing your computations - Deep Learning with JAX

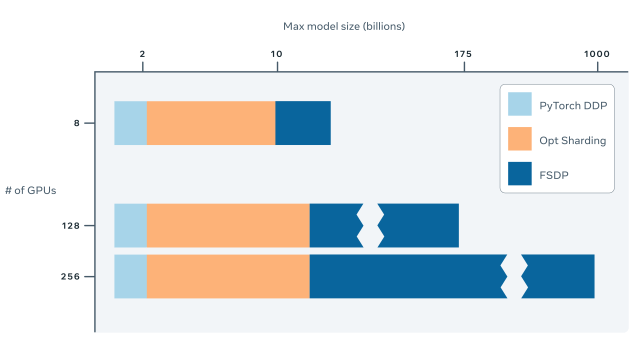

Fully Sharded Data Parallel: faster AI training with fewer GPUs

Why You Should (or Shouldn't) be Using Google's JAX in 2023

Compiler Technologies in Deep Learning Co-Design: A Survey

Compiler Technologies in Deep Learning Co-Design: A Survey

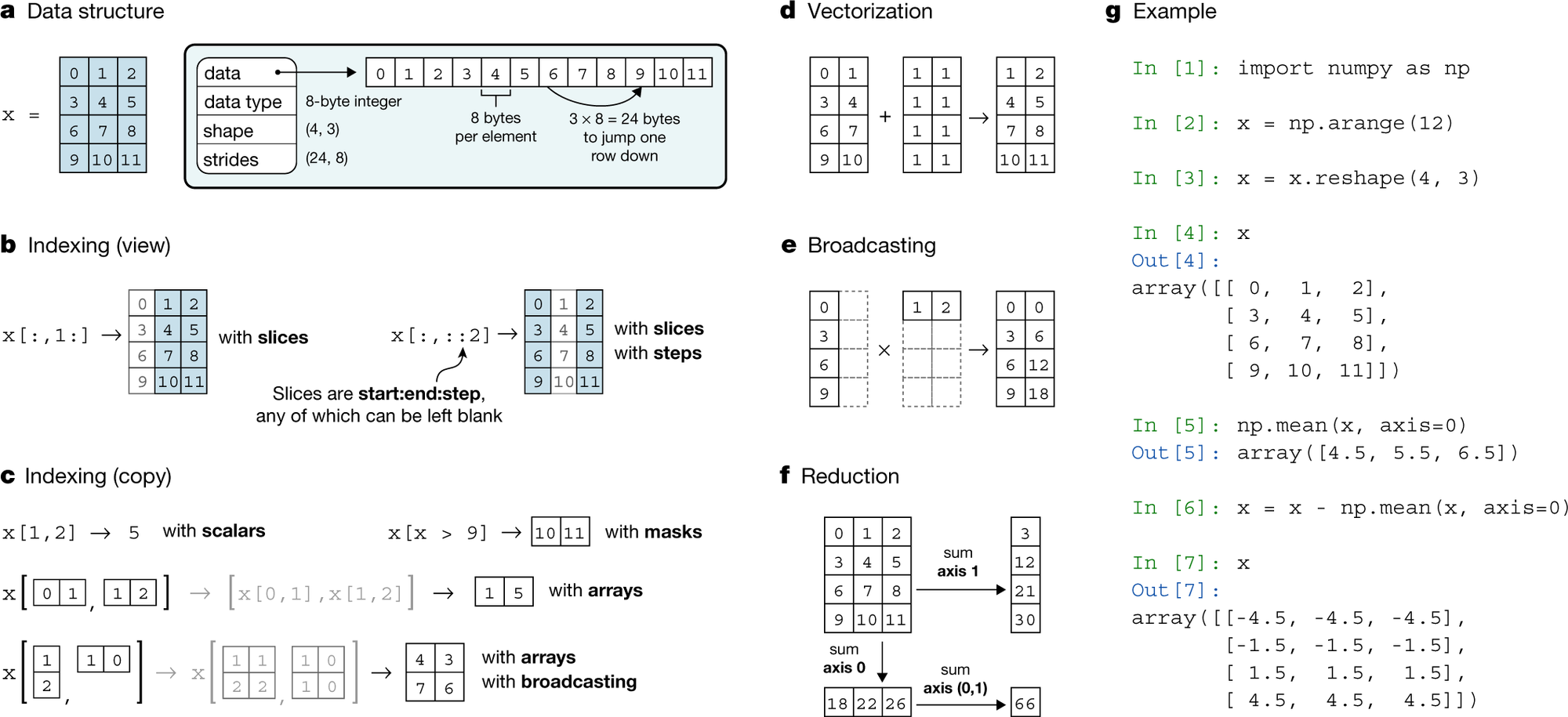

Machine Learning in Python: Main developments and technology

Recomendado para você

-

SCP-10000 World of Anthros, Wiki16 junho 2024

SCP-10000 World of Anthros, Wiki16 junho 2024 -

UNDERSTANDING POROSITY FORMATION AND PREVENTION WHEN WELDING16 junho 2024

UNDERSTANDING POROSITY FORMATION AND PREVENTION WHEN WELDING16 junho 2024 -

Polaroid Vs. Kodak16 junho 2024

Polaroid Vs. Kodak16 junho 2024 -

PDF) A novel local search for unicost set covering problem using16 junho 2024

PDF) A novel local search for unicost set covering problem using16 junho 2024 -

Power Bank 10000 Mah Carga Rápida Master-G - Electronicalamar16 junho 2024

Power Bank 10000 Mah Carga Rápida Master-G - Electronicalamar16 junho 2024 -

AES E-Library » Complete Journal: Volume 20 Issue 1016 junho 2024

AES E-Library » Complete Journal: Volume 20 Issue 1016 junho 2024 -

Configuring Destination Tables16 junho 2024

-

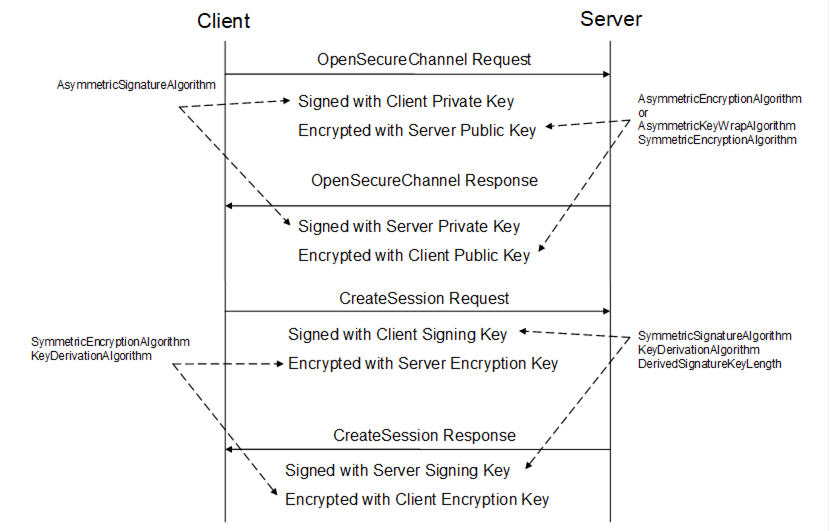

UA Part 6: Mappings - 6 Message SecurityProtocols16 junho 2024

UA Part 6: Mappings - 6 Message SecurityProtocols16 junho 2024 -

PDF) An Ant Colony based Hyper-Heuristic Approach for the Set16 junho 2024

PDF) An Ant Colony based Hyper-Heuristic Approach for the Set16 junho 2024 -

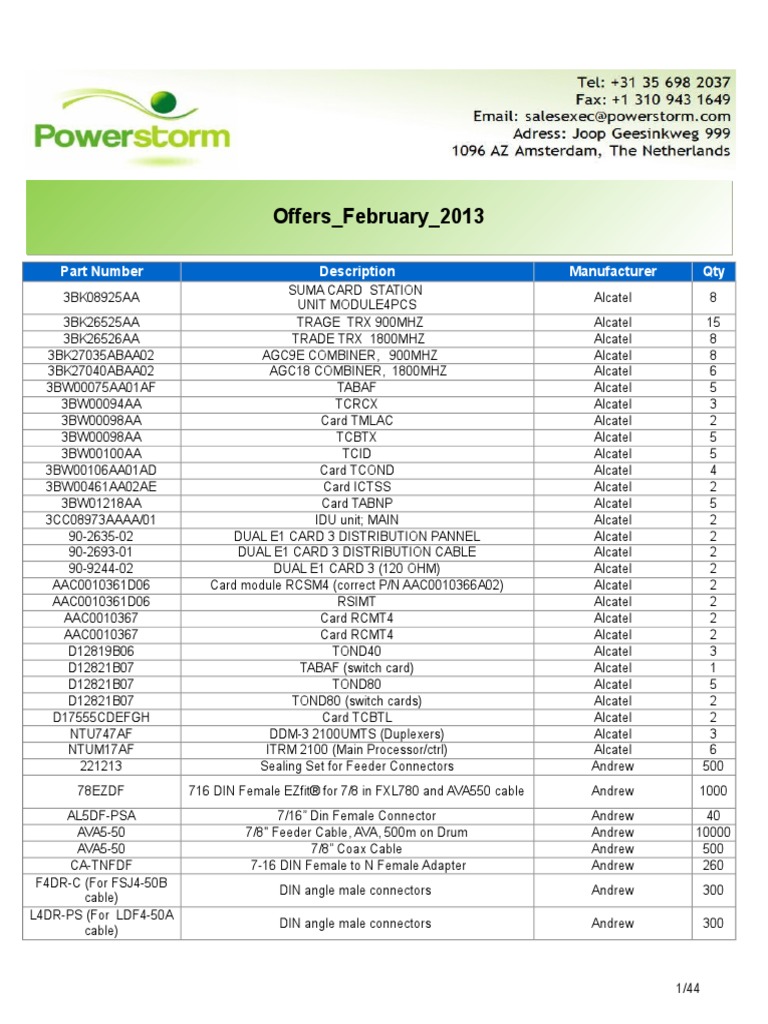

Offers February 2013 Powerstorm16 junho 2024

você pode gostar

-

Ben Tennyson, Wiki Dynami Battles16 junho 2024

Ben Tennyson, Wiki Dynami Battles16 junho 2024 -

Wishing Compass not pointing towards Khazad-Dum16 junho 2024

Wishing Compass not pointing towards Khazad-Dum16 junho 2024 -

Giri DESTRÓI Gukesh com SACRIFÍCIOS BRILHANTES16 junho 2024

Giri DESTRÓI Gukesh com SACRIFÍCIOS BRILHANTES16 junho 2024 -

JoJo's Bizarre Adventure: Golden Wind (Season 5: VOL.1 - 39 End) ~ All Region ~16 junho 2024

JoJo's Bizarre Adventure: Golden Wind (Season 5: VOL.1 - 39 End) ~ All Region ~16 junho 2024 -

Powerwolf - Apple Music16 junho 2024

Powerwolf - Apple Music16 junho 2024 -

Join a Game - Quizizz Quizzes, Online quizzes, Free quizzes16 junho 2024

Join a Game - Quizizz Quizzes, Online quizzes, Free quizzes16 junho 2024 -

One Piece: live action vs manga - Sportskeeda Stories16 junho 2024

One Piece: live action vs manga - Sportskeeda Stories16 junho 2024 -

Sandwich Monday: Papa John's Frito Chili Pizza : The Salt : NPR16 junho 2024

Sandwich Monday: Papa John's Frito Chili Pizza : The Salt : NPR16 junho 2024 -

Códigos de Guerra - Filme 2001 - AdoroCinema16 junho 2024

Códigos de Guerra - Filme 2001 - AdoroCinema16 junho 2024 -

Kamigami No Asobi: The Complete Collection (Blu-ray)16 junho 2024

Kamigami No Asobi: The Complete Collection (Blu-ray)16 junho 2024