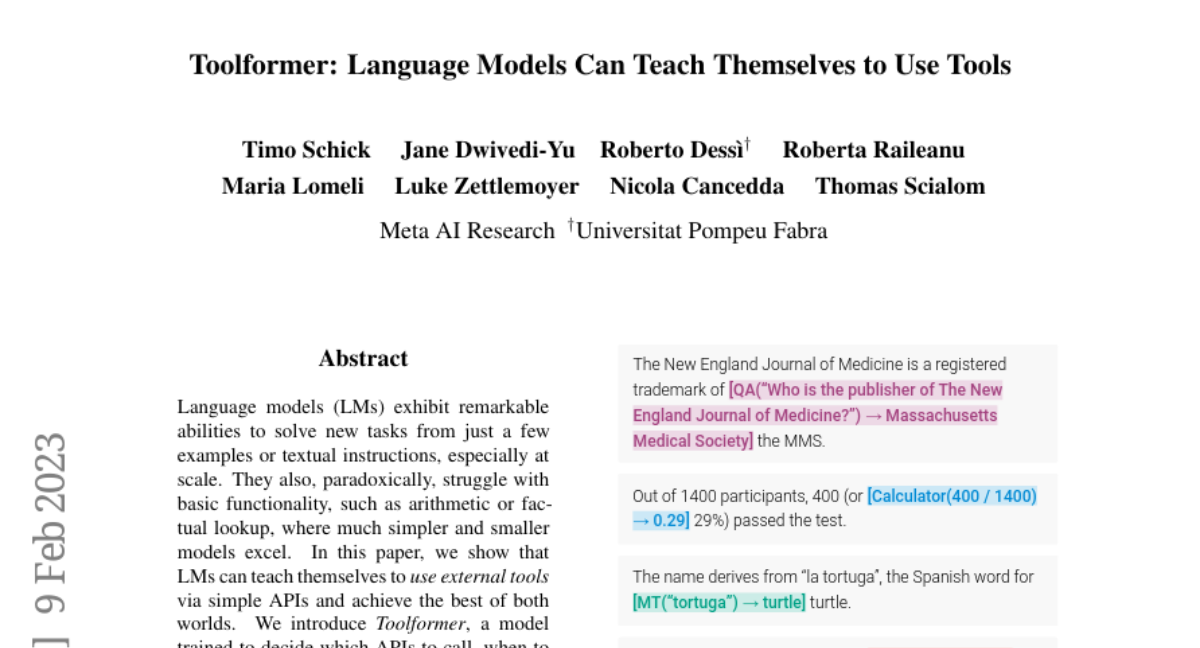

Timo Schick (@timo_schick) / X

By a mysterious writer

Last updated 01 March 2025

Attentive Mimicking: Better Word Embeddings by Attending to Informative Contexts - ACL Anthology

Timo Schick on X: 🎉 With quite some delay, I'm happy to announce that Automatically Identifying Words That Can Serve as Labels for Few-Shot Text Classification (w/ Helmut Schmid & @HinrichSchuetze) has

BERTRAM: Improved Word Embeddings Have Big Impact on Contextualized Model Performance - ACL Anthology

Emanuele Vivoli (@EmanueleVivoli) / X

Fabio Petroni (@Fabio_Petroni) / X

Exploiting Cloze-Questions for Few-Shot Text Classification and Natural Language Inference - ACL Anthology

timoschick (Timo Schick)

Alexandre Salle (@alexsalle) / X

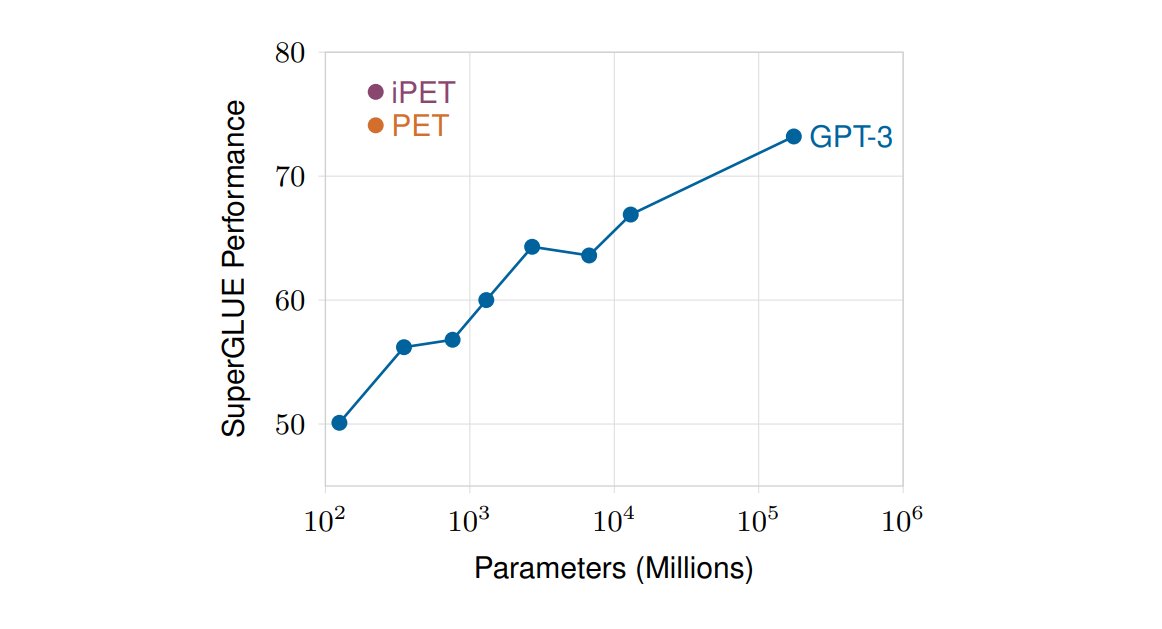

Timo Schick on X: 🎉 New paper 🎉 We show that language models are few-shot learners even if they have far less than 175B parameters. Our method performs similarly to @OpenAI's GPT-3

Recomendado para você

-

Gamma Technologies to Acquire FEMAG Software for Electric Machine Applications01 março 2025

Gamma Technologies to Acquire FEMAG Software for Electric Machine Applications01 março 2025 -

Gamma Technologies on LinkedIn: Register for the 2023 GTTC events in the US and EU. Submit your…01 março 2025

-

FEMAG Transportes01 março 2025

-

KSL Esplanade Mall01 março 2025

KSL Esplanade Mall01 março 2025 -

M+, Essay01 março 2025

M+, Essay01 março 2025 -

May 2023 – Page 2 – Little Bits of Gaming & Movies01 março 2025

May 2023 – Page 2 – Little Bits of Gaming & Movies01 março 2025 -

Mola De Topo 5563 Latão Cromado Femag - Duda Ferragens01 março 2025

Mola De Topo 5563 Latão Cromado Femag - Duda Ferragens01 março 2025 -

FY 2022 SAFER Grant Application Period Set to Open01 março 2025

FY 2022 SAFER Grant Application Period Set to Open01 março 2025 -

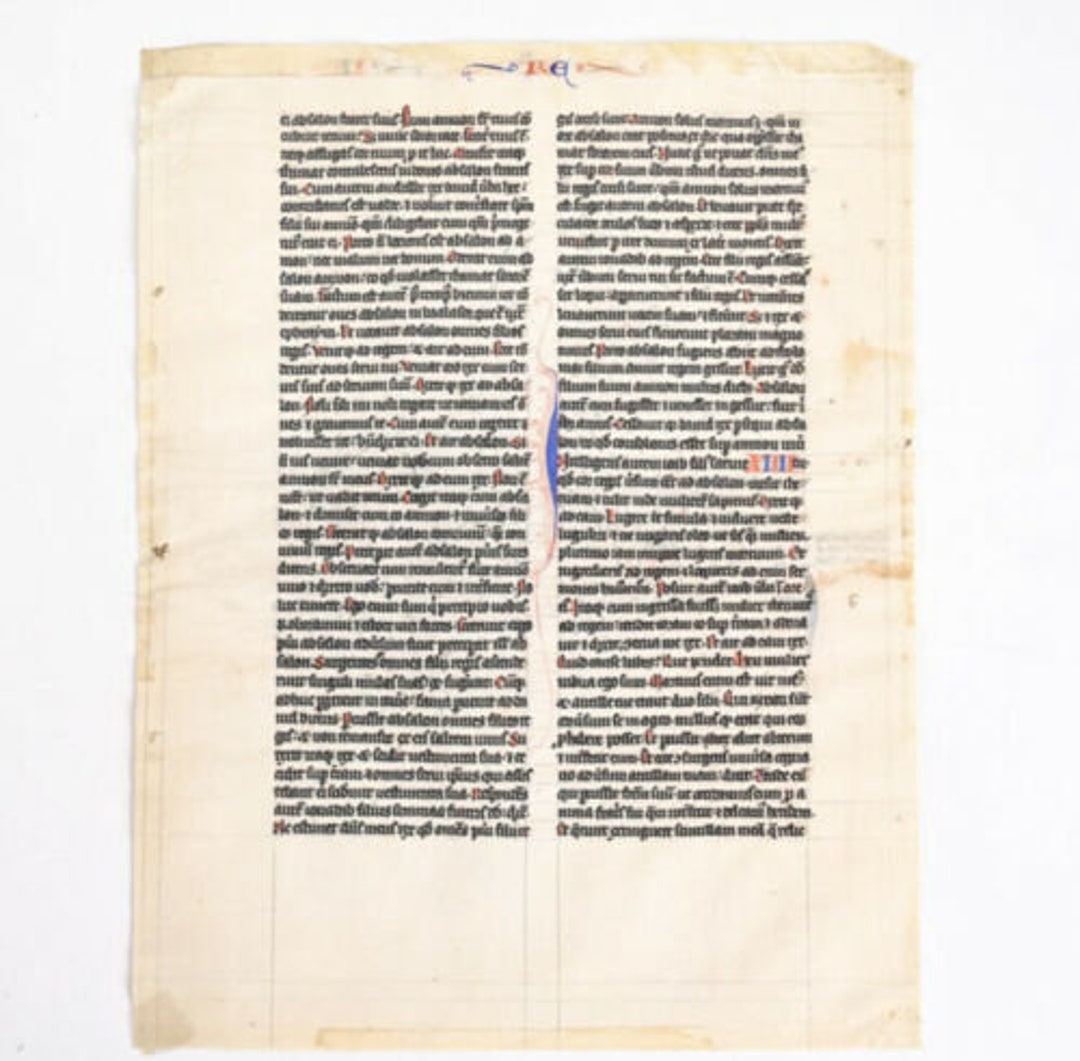

13th Century French Illuminated Manuscript Leaf 9 X01 março 2025

13th Century French Illuminated Manuscript Leaf 9 X01 março 2025 -

Eliminatórias FEMAG 2023 Dia 1. Crédito Hygor Abreu.01 março 2025

Eliminatórias FEMAG 2023 Dia 1. Crédito Hygor Abreu.01 março 2025
